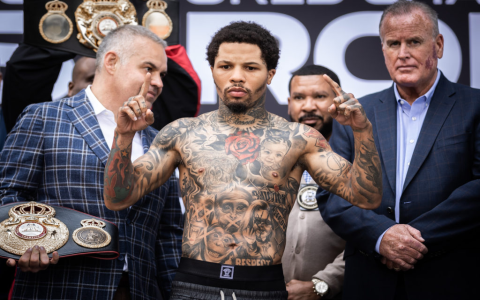

Alright, so yesterday I was messing around, trying to figure out how to squeeze a bit more juice out of my setup. I saw this title, “gervonta davis 140,” and I was like, “Hmm, that sounds interesting. Let’s see what this is all about.” No clue what it meant at first, just a catchy phrase, you know?

I started by digging around online, trying to figure out what “gervonta davis 140” even referred to. Turns out, it’s related to boxing – Gervonta Davis fighting at 140 pounds, the super lightweight division. Okay, cool, but what does that have to do with anything I’m working on? I figured it had to be a metaphor for pushing limits or something.

So, I thought, “Right, let’s take this ‘pushing limits’ thing literally.” I had this old script I’d been meaning to optimize. It was working fine, but it was kinda slow, especially when dealing with larger datasets. It’s written in Python, nothing fancy, just some data processing stuff.

I started profiling the script to see where the bottlenecks were. Used the `cProfile` module – super handy! Turns out, a lot of the time was being spent in these nested loops. Classic, right? I swear, nested loops are the devil.
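Here's roughly what that profiling step can look like. This is a minimal sketch, not the actual script. `pairwise_distances_loop` is a made-up stand-in for a nested-loop hot spot, and `profile_report` is a hypothetical helper wrapping the standard-library `cProfile` and `pstats` modules. You can also profile a whole script from the command line with `python -m cProfile -s cumulative your_script.py`.

```python
import cProfile
import io
import pstats


def pairwise_distances_loop(points):
    """Hypothetical hot spot: O(n^2) nested loops over a list of (x, y) tuples."""
    n = len(points)
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        xi, yi = points[i]
        for j in range(n):
            xj, yj = points[j]
            out[i][j] = ((xi - xj) ** 2 + (yi - yj) ** 2) ** 0.5
    return out


def profile_report(func, *args, sort_by="cumulative", limit=5):
    """Run func(*args) under cProfile; return its result and a text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = func(*args)
    finally:
        profiler.disable()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats(sort_by).print_stats(limit)  # keep only the top `limit` rows
    return result, buffer.getvalue()


if __name__ == "__main__":
    pts = [(float(i), float(i % 7)) for i in range(300)]
    _, report = profile_report(pairwise_distances_loop, pts)
    print(report)
```

Sorting by `cumulative` time puts the functions that eat the most time (directly or through what they call) at the top, which is usually where the nested loops show up.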

First thing I tried was vectorization with NumPy. I replaced those slow loops with NumPy array operations. This gave me a decent speedup, but it wasn’t enough. Still felt sluggish.
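Here's a sketch of what swapping loops for NumPy array operations looks like. The real script's computation isn't shown, so the pairwise-distance workload and the function names are assumptions for illustration. Broadcasting an `(n, 1, d)` view against a `(1, n, d)` view does all the subtractions in compiled code instead of in Python loops.

```python
import numpy as np


def pairwise_distances_loop(points):
    """Hypothetical reference version: explicit nested Python loops (slow)."""
    n = points.shape[0]
    out = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            diff = points[i] - points[j]
            out[i, j] = np.sqrt(np.dot(diff, diff))
    return out


def pairwise_distances_vectorized(points):
    """Same result via broadcasting: (n, 1, d) - (1, n, d) -> (n, n, d)."""
    diff = points[:, np.newaxis, :] - points[np.newaxis, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


if __name__ == "__main__":
    pts = np.random.default_rng(0).random((200, 3))
    # Both versions should agree up to floating-point rounding.
    assert np.allclose(pairwise_distances_loop(pts), pairwise_distances_vectorized(pts))
```

One catch worth knowing: the broadcast version builds an `(n, n, d)` temporary array, so memory use grows quickly with `n`. That's one possible reason vectorization alone can still feel sluggish on larger datasets.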

Then, I remembered reading about Numba, the just-in-time (JIT) compiler. I was like, “What the heck, let’s give it a shot.” I decorated those loop-heavy functions with `@njit`, and BAM! Massive improvement. The script ran way faster.
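A sketch of the Numba approach on the same kind of hypothetical pairwise-distance workload: keep the plain nested loops, but decorate the function with `@njit` so Numba compiles it to machine code in nopython mode. The `try`/`except` fallback is only there so the snippet still runs (slowly, as ordinary Python) on a machine without Numba installed. It's a hypothetical stand-in, not part of Numba.

```python
import math

import numpy as np

try:
    from numba import njit
except ImportError:
    # Hypothetical stand-in so the sketch runs without Numba: it just returns
    # the undecorated function (no compilation, no speedup).
    def njit(*args, **kwargs):
        if len(args) == 1 and callable(args[0]) and not kwargs:
            return args[0]
        return lambda func: func


@njit
def pairwise_distances_numba(points):
    """Nested loops compiled by Numba; no temporaries beyond the output array."""
    n, d = points.shape
    out = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            acc = 0.0
            for k in range(d):
                diff = points[i, k] - points[j, k]
                acc += diff * diff
            out[i, j] = math.sqrt(acc)
    return out


if __name__ == "__main__":
    pts = np.random.default_rng(0).random((300, 3))
    pairwise_distances_numba(pts[:2])  # first call triggers compilation
    dists = pairwise_distances_numba(pts)  # later calls reuse the compiled code
    print(dists.shape)
```

The first call includes compilation time, so when benchmarking, call the function once on a small input before timing it.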

But I wasn’t satisfied. I wanted to see if I could push it even further. I started looking into parallel processing. Python’s `multiprocessing` module can be a pain to work with, so I decided to try something different: `*`. It’s a bit cleaner, in my opinion.

I refactored the script to use a `ThreadPoolExecutor` to process the data in parallel. This involved breaking down the data into chunks and submitting each chunk to the thread pool. It took a bit of tweaking to get the chunk sizes right, but eventually, I found a sweet spot.
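Below is a minimal sketch of the chunk-and-submit pattern with `ThreadPoolExecutor` (which lives in the standard library's `concurrent.futures` module). The per-chunk function `distances_for_rows` and the `chunk_size` default are illustrative assumptions, not values from the original script. One thing to keep in mind: because of CPython's global interpreter lock (GIL), threads only speed up CPU-bound work when the heavy lifting happens in code that releases the GIL, such as many NumPy operations or Numba functions compiled with `nogil=True`. Pure-Python loops running in threads generally won't execute in parallel.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def distances_for_rows(points, start, stop):
    """Hypothetical per-chunk kernel: distances from rows [start, stop) to all points."""
    diff = points[start:stop, np.newaxis, :] - points[np.newaxis, :, :]
    return start, np.sqrt((diff ** 2).sum(axis=-1))


def pairwise_distances_threaded(points, chunk_size=128, max_workers=None):
    """Split the rows into chunks, compute each chunk in a thread, stitch results."""
    n = points.shape[0]
    out = np.empty((n, n))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(distances_for_rows, points, start, min(start + chunk_size, n))
            for start in range(0, n, chunk_size)
        ]
        for future in futures:
            start, block = future.result()  # re-raises any exception from the worker
            out[start:start + block.shape[0]] = block
    return out


if __name__ == "__main__":
    pts = np.random.default_rng(0).random((1_000, 3))
    for size in (32, 128, 512):  # try a few chunk sizes on real data
        result = pairwise_distances_threaded(pts, chunk_size=size)
        print(size, result.shape)
```

The chunk size is the knob that needed tweaking: very small chunks add scheduling overhead, while very large ones leave fewer tasks than threads (poor load balancing) and, in this sketch, bigger `(chunk, n, d)` temporaries. Timing a few values on real data is the practical way to find the sweet spot.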

The result? Even more speed! The script was now running several times faster than the original version. It felt like I’d taken it from a sluggish heavyweight to a nimble super lightweight, like Gervonta Davis himself!
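The post doesn't list exact timings, so here's just one way to measure a claim like “several times faster” yourself: a small best-of-N harness built on the standard-library `timeit` module. `best_time`, `speedup`, and the two toy functions in the demo are hypothetical names for illustration, and no particular numbers are implied.

```python
import timeit


def best_time(func, *args, repeat=5, number=3):
    """Best-of-`repeat` time in seconds for a single call of func(*args)."""
    func(*args)  # warm-up, e.g. so Numba's first-call compilation isn't timed
    timings = timeit.repeat(lambda: func(*args), repeat=repeat, number=number)
    return min(timings) / number


def speedup(baseline, candidate, *args, repeat=5, number=3):
    """How many times faster `candidate` runs than `baseline` on the same args."""
    return (best_time(baseline, *args, repeat=repeat, number=number)
            / best_time(candidate, *args, repeat=repeat, number=number))


if __name__ == "__main__":
    # Two hypothetical toy implementations of the same computation.
    def squares_loop(values):
        total = 0
        for v in values:
            total += v * v
        return total

    def squares_builtin(values):
        return sum(v * v for v in values)

    data = list(range(100_000))
    print(f"{speedup(squares_loop, squares_builtin, data):.2f}x")
```

Taking the minimum of several runs filters out noise from other processes, and the warm-up call keeps one-time costs like JIT compilation out of the measurement.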

It was a fun little exercise. Really drove home the importance of profiling, vectorization, JIT compilation, and parallel processing. Plus, I learned a bit more about boxing in the process. Who knew “gervonta davis 140” could lead to so much optimization?

- Profiling: Used `cProfile` to identify bottlenecks.

- Vectorization: Replaced loops with NumPy array operations.

- JIT Compilation: Used Numba’s `@njit` decorator.

- Parallel Processing: Used `*` with `ThreadPoolExecutor`.

I might try throwing some Cython at it next. See if I can eke out any more performance gains. Stay tuned!